Data modernization expert Claire Salling explains three BigQuery backup methods for protecting your data while you work.

A backup strategy for recovering your data is a must-have in the event of a data failure. With Google BigQuery’s snapshot feature, creating backups for your data in BigQuery for archival, disaster recovery, and regulatory compliance is easier and more efficient than ever.

In this article, we’ll start with a look at Time Travel and Automated Replicas and then cover the three main methods for backing up your data in BigQuery:

- Table Exports

- Table Snapshots

- Scheduled Queries

Time Travel and Automated Replicas

Before covering the main backup methods, it’s worth looking at BigQuery’s Time Travel and Automated Replicas features. These two options cover many of the requirements that demand the creation of backups in the first place.

BigQuery Time Travel

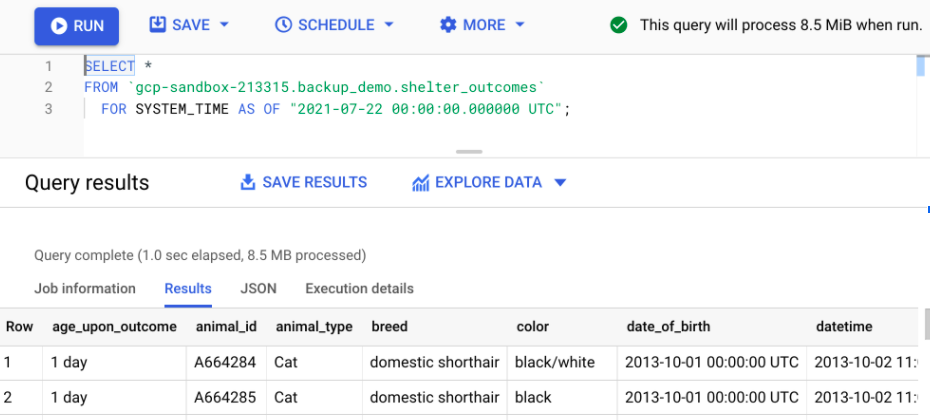

Time Travel allows you to query a table’s state as it was at any time within the last seven days, providing de-facto data backups for a rolling seven-day period.

Time Travel queries use the FOR SYSTEM_TIME AS OF clause to indicate which version of the table you want to retrieve results from. All you, as the user, have to do is to specify the point in time from which you want to pull data.

Figure 1: BigQuery Time Travel allows you to retrieve query results from past versions of tables, even if the data in the table has since changed or been deleted

You can also use Time Travel to restore a table to its previous state, from up to seven days ago. This can be handy if a table was accidentally truncated or if you’re having data validation issues and need to undo a bad update to the table.

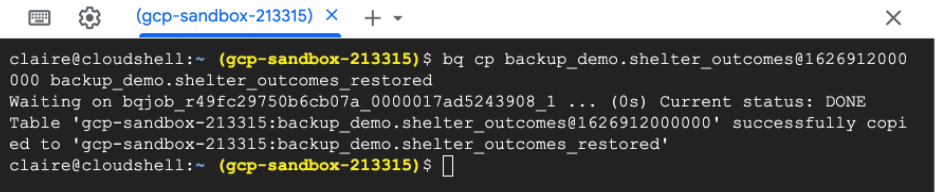

When running a restoration, you can use the BigQuery command-line tool along with table decorators to specify the version of the table you would like to restore.

Figure 2: Use the BigQuery command-line tool and Time Travel decorators to restore previous table versions.

Since Time Travel only backs up data for a rolling seven-day period, if your use case requires backups to be retained for longer, you’ll need to create an additional backup process using one of the methods outlined in later sections.

Automated Replicas

Automated Replicas of your BigQuery data are provided by default.

BigQuery datasets are tolerant of both machine-level and zonal-level failure with no data loss or significant downtime. As a result, there isn’t additional configuration needed on your part to ensure this level of disaster resilience (one of the many benefits of adopting a managed cloud service).

For regional-failure tolerance, BigQuery uses the concept of a multi-region, a location option for your BigQuery data, which spans multiple Google data centers. Tables stored in multi-region are automatically replicated across those different data centers. Data replicated to a different data center is usually about one hour behind the current state of the original data, so in the event of a disaster that brings offline or destroys a regional data center, your data will be, for the most part, recoverable. However, recent updates to the data may be lost.

For customers that store their BigQuery data in a single region, such as us-central1 or us-east4, no automated regional replication occurs, and additional backups are needed to ensure regional-level tolerance, which is what we’ll dig into next.

Three BigQuery Backup Methods

Now, we’ll look at the three main backup methods: table exports, table snapshots, and scheduled queries. The one you use will come down to your use case and needs.

Backup Method One: BigQuery Table Exports

BigQuery provides users the option of exporting single tables to flat files in Google Cloud Storage, effectively creating a point-in-time backup of your data that can be used to restore BigQuery tables to their prior state. You can choose to export to either CSV, newline-delimited JSON, or Avro files.

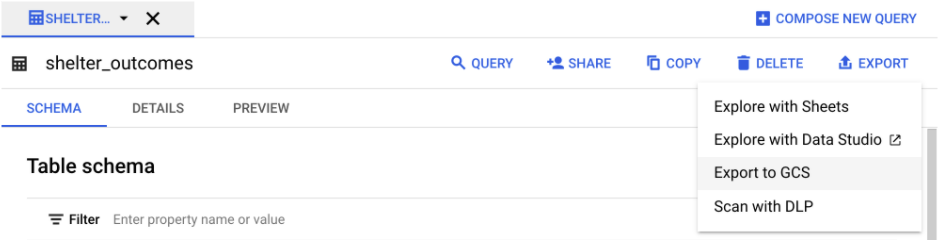

Manual Table Export

You can manually create a table export using the Google Cloud Console:

Figure 3: Exporting BigQuery tables to Google Cloud Storage using the Google Cloud Console

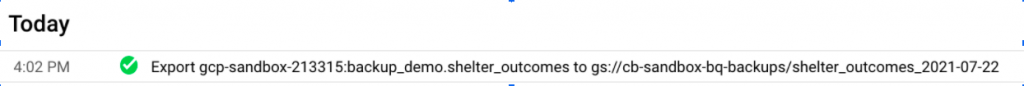

Automated Table Export

You can also programmatically create table exports using the BigQuery API in the event you need automated routine backups.

Figure 4: Table export jobs show up in your job history and count towards your export quotas

Note: While table exports guarantee to preserve the contents of your data, they don’t ensure that your schema, partition key, or other table metadata will be saved. Export the metadata separately and collate it with the data if and when the backup is restored. (Valiappa Lakshmanas provides an excellent overview on how to accomplish this.)

Backup Method Two: BigQuery Table Snapshots

BigQuery table snapshots are exactly what they sound like — they preserve the contents of a table at the time of capture. There are several differences between table snapshots and table exports.

Table snapshots can be effectively housed in BigQuery without other Google Cloud services. This simplifies the IAM needed to access table snapshots and consolidates all of your data warehouse resources using fewer services within the Google Cloud Platform.

Table snapshots also only store the bytes that are different between the base table as it was when the snapshot was taken and the base table as it is today. In contrast, table exports store the full content of the base table at the time of export. For slowly changing tables, this can amount to a significant size (and cost) difference between the two data exports. More on how the size difference impacts your potential storage costs later in the article.

Lastly, table snapshots capture metadata associated with the table, including the table schema and access controls. This is a major convenience compared to using the BigQuery exports, which require you to export the table metadata separately and collate the table metadata and data when restoring the table.

Backup Method Three: BigQuery Scheduled Queries

Scheduled queries in BigQuery allow you to run queries on a periodic basis and then store the results of those queries in a separate table. Scheduled queries can contain both DDL and DML. Additionally, you can parameterize both the query string as well as the destination table in order to implement more sophisticated updates.

Scheduled queries leverage features of the BigQuery Data Transfer Service, which means you do need to enable that service before creating or running scheduled queries in BigQuery.

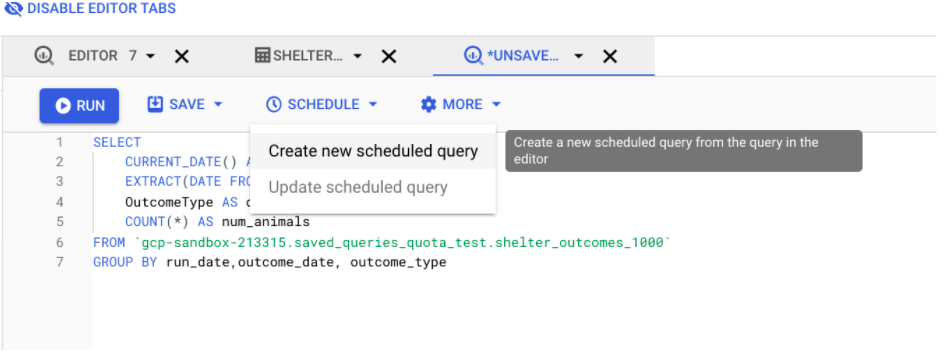

Creating a Scheduled Query

Creating scheduled queries via the interface is simple. For scenarios where you only need a handful of tables to be backed up, it can be a handy way of orchestrating those backups without having to resort to external automation.

Figure 5: Create a scheduled query from the query composer window

The only drawback is that this is potentially the most expensive of the three options. BigQuery storage costs are typically greater than Google Cloud Storage costs. Depending on how your queries are written, you could potentially be capturing more data in these backups than would be stored if you were using Table snapshots.

Comparing BigQuery Backup Costs

To estimate the actual cost differences between these methods, I hypothesized the following scenarios:

- A BigQuery data table that begins the calendar month at 1.03 TB. This table (Table A) needs to have daily backups created which are retained for a rolling 30-day period. Table A is slowly growing over time, by about one percent per day. By the end of the month, it’s reached about 1.39 TB in size.

- A BigQuery data table that begins the calendar month at 1.03 TB. This table (Table B) also needs to have daily backups created, which are retained for a 30-day period. Unlike Table A, Table B is completely overwritten on a daily basis and remains about the same size. By the end of the month, it’s still about 1.03 TB in size.

For all exports to Google Cloud services, data is stored in US multi-region archival storage, which is priced at $0.0012 per GB per month. Data is assumed to be the same size in Google Cloud Storage as it is in BigQuery, although there is often a size difference between data stored in a database vs. data stored in text or binary formats in flat files.

| Backup Method | Table Exports | Table Snapshots | Scheduled Queries |

| Monthly Cost for Slowly Changing Tables | $45 | $105 (+130%) | $740 (+1644%) |

| Monthly Cost for Rapidly Changing Tables | $38 | $618 (+1626%) | $641 (+1687%) |

| Permissions Needed | Read and write permissions to Google Cloud services and export permissions from BigQuery | BigQuery Admin permissions | BigQuery and BigQuery Data Transfer service permissions |

| Automation | Can only be triggered manually via the GUI and must be automated programmatically | Can be automated via the GUI | Can be automated via the GUI |

| Storage Format | Flat files (CSV, JSON, Avro) | BigQuery storage | BigQuery storage |

Table 1: Cost and feature comparisons between BigQuery backup options

Which Backup Method Should You Choose?

Analytical data is often not business-critical in the same way operational data is. In the unlikely event Google Cloud’s built-in protections fail, you can probably tolerate some data loss in BigQuery.

However, if you need to back up your BigQuery data, you’ll need to weigh the cost of backup storage against the ease of implementation. Table exports to Google Cloud Storage will likely be less expensive (provided you choose the correct storage class), but they’re also more difficult to implement and will require a moderate amount of programming knowledge.

Table snapshots are much easier to implement but can potentially cost over 15x what the storage would be for archival exports — a significant cost difference that should be taken into consideration, especially for large tables.

Are you thinking about making BigQuery a part of your strategy? Our Google Cloud Experts can help you decide if this cloud warehousing solution is right for you. Schedule a consultation with us today.